Introduction

This page is meant to be treated as a follow-up to this scaler comparison done with ImageMagick. I'm only going to talk about niche topics here, so just refer to that other page if you only want to read about scalers.

The Effect of the Downsampler in Upsampling Evaluation

All luma doublers are trained with artificial data, which means the LR images are obtained by downsampling the HR references. The implication here is that if the test procedure matches whatever was done to train a model, the model will obviously score higher. This creates an unfair playing ground if these models are trained differently, as testing with images downsampled in linear light for example would greatly favour any models trained with images that were equally downsampled in linear light. The choice of filter is also very important, as different filters produce images with different characteristics.

Downsampling in linear light dilates bright structures while eroding dark ones relative to downsampling in gamma light. This is generally accepted as correct but it might not always be ideal depending on the content.
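The dilation and erosion described above can be illustrated with a small one-dimensional sketch. It assumes the sRGB transfer function stands in for "gamma light" (the page does not say which transfer function is meant) and uses a plain 2x box average; the function names are made up for this example.

```python
def srgb_to_linear(v):
    """Decode an sRGB-encoded value in [0, 1] to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v):
    """Encode a linear-light value in [0, 1] back to sRGB."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1 / 2.4) - 0.055


def box_downsample(row):
    """2x box downsample: average each pair of neighbouring samples."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]


def downsample_gamma(row):
    """Average the encoded values directly (gamma light)."""
    return box_downsample(row)


def downsample_linear(row):
    """Decode to linear light, average, then encode again."""
    linear = [srgb_to_linear(v) for v in row]
    return [linear_to_srgb(v) for v in box_downsample(linear)]


# A thin bright line on a dark background, and the inverse.
bright_line = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
dark_line = [1.0, 1.0, 1.0, 0.0, 1.0, 1.0]

for name, row in (("bright line", bright_line), ("dark line", dark_line)):
    print(name, "gamma :", [round(v, 3) for v in downsample_gamma(row)])
    print(name, "linear:", [round(v, 3) for v in downsample_linear(row)])
```

In both cases the mixed sample comes out brighter in linear light (about 0.74 instead of 0.5), so the bright line keeps more of its intensity while the dark line gets washed out.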

Interestingly, most models seem to show better reconstruction quality when you feed them LR images that have been downsampled in gamma light, and pretty much all mpv shaders have been trained with data downsampled in gamma light (including NNEDI3, FSRCNNX, RAVU, ArtCNN, etc.). If we instead train on images that have been downsampled in linear light, the model will produce images with dilated dark features and eroded bright features, which is the exact opposite of what happens when you downsample in linear light. The model is simply learning how to "undo" what linear light downsampling "does", so this behaviour makes perfect sense.

For these reasons, the upsampling tests will be done with an image downsampled in gamma light.

Upsampling Shaders

I still do not have a good way of automating mpv tests and therefore I'll have to stick to a single test image, which is going to be the luma portion of kumiko.png:

Testing with a single image makes this very unscientific, but it is what it is.

Upsampling Methodology

The following shaders were "benchmarked":

I've also added a few built-in filters to the mix just to have some reference points.

The test image is downsampled with:

magick convert kumiko.png -filter box -resize 50% downsampled.png

The image is then upsampled back with:

mpv --no-config --vo=gpu-next --no-hidpi-window-scale --window-scale=2.0 --pause=yes --screenshot-format=png --sigmoid-upscaling --deband=no --dither-depth=no --screenshot-high-bit-depth=no --glsl-shader="path/to/meme/shader" downsampled.png

Upsampling Results

| Shader/Filter | MAE | PSNR | SSIM | MS-SSIM | MAE (N) | PSNR (N) | SSIM (N) | MS-SSIM (N) | Mean |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ArtCNN_C4F32 | 2.25E-03 | 43.4749 | 0.9923 | 0.9986 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| ArtCNN_C4F16 | 2.43E-03 | 42.6808 | 0.9916 | 0.9985 | 0.9447 | 0.9148 | 0.9639 | 0.9855 | 0.9522 |
| ravu-zoom-ar-r3 | 3.14E-03 | 39.3867 | 0.9880 | 0.9982 | 0.7247 | 0.5613 | 0.7719 | 0.8670 | 0.7313 |
| ravu-lite-ar-r4 | 3.17E-03 | 39.6571 | 0.9878 | 0.9982 | 0.7131 | 0.5904 | 0.7588 | 0.8613 | 0.7309 |
| ravu-lite-ar-r3 | 3.26E-03 | 39.4102 | 0.9873 | 0.9981 | 0.6869 | 0.5639 | 0.7342 | 0.8566 | 0.7104 |
| ravu-zoom-ar-r2 | 3.26E-03 | 38.8482 | 0.9872 | 0.9981 | 0.6854 | 0.5036 | 0.7279 | 0.8426 | 0.6899 |
| ravu-lite-ar-r2 | 3.31E-03 | 38.7971 | 0.9870 | 0.9982 | 0.6703 | 0.4981 | 0.7172 | 0.8634 | 0.6872 |
| lanczos | 4.63E-03 | 36.5113 | 0.9799 | 0.9977 | 0.2600 | 0.2528 | 0.3407 | 0.7202 | 0.3934 |
| polar_lanczossharp | 4.85E-03 | 36.0673 | 0.9786 | 0.9974 | 0.1936 | 0.2052 | 0.2686 | 0.6012 | 0.3171 |
| bilinear | 5.47E-03 | 34.1550 | 0.9735 | 0.9955 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
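The (N) columns and the Mean column are not defined on this page, but the numbers look consistent with min-max normalisation across the rows (worst row maps to 0, best row maps to 1) followed by a plain average of the four normalised scores. The sketch below shows that assumed procedure; `normalise` and `higher_is_better` are names invented for this example, and small differences from the table are expected because the raw values shown are rounded.

```python
def normalise(values, higher_is_better=True):
    """Min-max normalise scores so the worst maps to 0 and the best to 1."""
    worst = min(values) if higher_is_better else max(values)
    best = max(values) if higher_is_better else min(values)
    return [abs(v - worst) / abs(best - worst) for v in values]


# PSNR of ArtCNN_C4F32, ArtCNN_C4F16 and bilinear, taken from the table.
psnr = [43.4749, 42.6808, 34.1550]
print([round(v, 4) for v in normalise(psnr)])

# MAE is an error metric, so lower is better.
mae = [2.25e-3, 2.43e-3, 5.47e-3]
print([round(v, 4) for v in normalise(mae, higher_is_better=False)])

# The Mean column then matches the average of the four (N) columns,
# here for the ArtCNN_C4F16 row.
c4f16_normalised = [0.9447, 0.9148, 0.9639, 0.9855]
print(round(sum(c4f16_normalised) / len(c4f16_normalised), 4))
```

Because the normalisation depends on the best and worst rows, adding or removing a shader from the comparison changes every normalised score.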

Upsampling Commentary

As we can see in the table above, ArtCNN seems to be the best option when it comes to luma doubling.

For lower scaling factors between 1x and 2x, you can expect this list to remain mostly the same as long as you use a sharp downsampling filter for the luma doublers. The choice of filter actually has a huge impact on how sharp the final image will be, so sticking to the hermite default makes all doublers much softer than ravu-zoom for example. Also keep in mind that the difference between the shaders also becomes smaller as you decrease the scaling factor.

Chroma Shaders

Using real anime content to test chroma shaders is difficult because we almost never have it available without chroma subsampling, so a piece of promotional art will be used instead:

Chroma Methodology

The following shaders were "benchmarked":

A "near lossless" 420 version of aoko.png was created:

avifenc aoko.png --min 0 --max 0 -y 420 420.avif

The mpv options remain the same, with the exception that we don't need

Chroma Results

| Shader/Filter | MAE | PSNR | SSIM | MS-SSIM | MAE (N) | PSNR (N) | SSIM (N) | MS-SSIM (N) | Mean |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ArtCNN_C4F32_Chroma | 3.07E-03 | 43.6766 | 0.9911 | 0.9977 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| cfl | 3.73E-03 | 39.9866 | 0.9895 | 0.9977 | 0.8227 | 0.6108 | 0.9007 | 0.9923 | 0.8316 |
| cfl_lite | 3.81E-03 | 39.7656 | 0.9892 | 0.9976 | 0.8018 | 0.5875 | 0.8822 | 0.9668 | 0.8096 |
| krigbilateral | 4.22E-03 | 38.6022 | 0.9873 | 0.9976 | 0.6906 | 0.4647 | 0.7611 | 0.9518 | 0.7171 |
| fastbilateral | 4.37E-03 | 37.1016 | 0.9858 | 0.9974 | 0.6501 | 0.3064 | 0.6640 | 0.7962 | 0.6042 |
| jointbilateral | 4.60E-03 | 37.3093 | 0.9852 | 0.9973 | 0.5887 | 0.3283 | 0.6275 | 0.7394 | 0.5710 |
| lanczos | 5.70E-03 | 36.3881 | 0.9800 | 0.9972 | 0.2947 | 0.2312 | 0.2975 | 0.5954 | 0.3547 |
| polar_lanczossharp | 5.89E-03 | 35.9492 | 0.9795 | 0.9972 | 0.2442 | 0.1849 | 0.2628 | 0.6217 | 0.3284 |
| bilinear | 6.80E-03 | 34.1966 | 0.9753 | 0.9965 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

Chroma Commentary

If we look at the numbers, ArtCNN is easily the best option. That said, I wouldn't actually recommend spending your resources on this unless you're playing native resolution video and your GPU has nothing better to do.

The other shaders all suffer from chromaloc issues and they can be easily fooled when there's no correlation between luma and chroma. I'd personally skip all of them.

Antiring

Antiringing is a topic that I hadn't covered in the previous iteration of this page, but now that we have more than a single option we can also compare them.

In short, antiringing filters attempt to remove overshoots generated by sharp resampling filters when they meet a sharp intensity delta. What is commonly referred to as ringing is simply consequential to the filter's impulse response.

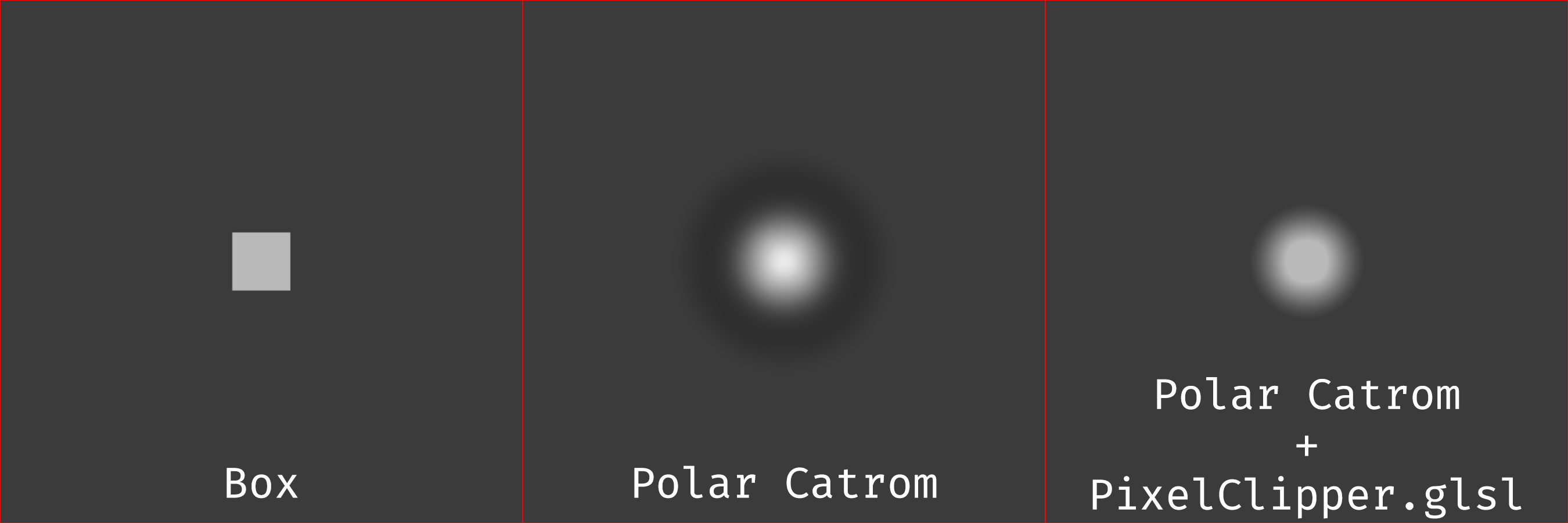

The following image shows this very well:

The negative weights in the filter are there for it to be able to quickly respond to high-frequency transitions, but they make the filter overshoot a little bit before reaching its final destination. The "intensity" of the ringing is directly related to the magnitude of the secondary lobes. The second lobe, which is almost always negative, is responsible for the overshooting you can see in this example, but filters with more lobes ring once per lobe, and the ringing can be "positive" as well (within the range set by the original pixels) with positive lobes. The "length" of the rings is directly related to the length of the lobes, which is why filters like polar lanczos have "longer" rings (the zero crossings don't fall exactly at the integers, but rather slightly after them).
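To see the overshoot in numbers, here is a minimal sketch that upsamples a one-dimensional step edge by 2x with an ordinary (orthogonal, not polar) 3-lobe Lanczos kernel. The sampling convention, the edge clamping and the function names are assumptions made for this example and are not taken from mpv or libplacebo.

```python
import math


def sinc(x):
    """Normalised sinc function."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def lanczos(x, lobes=3):
    """Lanczos kernel: a sinc windowed by a wider sinc."""
    if abs(x) >= lobes:
        return 0.0
    return sinc(x) * sinc(x / lobes)


def upsample_2x(src, lobes=3):
    """Upsample a 1D signal by 2x, clamping reads at the borders."""
    out = []
    for i in range(2 * len(src)):
        # Position of the output sample in source coordinates.
        centre = (i + 0.5) / 2 - 0.5
        first = math.floor(centre) - lobes + 1
        total = 0.0
        weight_sum = 0.0
        for j in range(first, first + 2 * lobes):
            w = lanczos(centre - j, lobes)
            sample = src[min(max(j, 0), len(src) - 1)]
            total += w * sample
            weight_sum += w
        out.append(total / weight_sum)
    return out


# A sharp edge: eight black samples followed by eight white ones.
step = [0.0] * 8 + [1.0] * 8
result = upsample_2x(step)
print("min:", round(min(result), 4), "max:", round(max(result), 4))
```

The source only contains values in [0, 1], yet the output dips below 0 and rises above 1 right next to the edge, which is the ringing caused by the negative lobe.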

Antiring Methodology

The methodology here is almost equal to the one used for upsampling, with the only difference being that we have to include

AR is only really necessary when you're using sharp filters. It makes no sense alongside blurry filters because blurry filters don't ring hard enough for it to be noticeable. There are a few sharp memes that are worth trying with AR if you feel adventurous, but generally speaking I think polar lanczossharp is pretty well balanced (a bit blurry even).
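As a rough mental model of what an AR strength setting does, the sketch below clamps a resampled value to the range of the nearby source pixels and then blends between the unclamped and the clamped result. This is a generic clamp-based illustration under that assumption only. It is not the actual algorithm used by libplacebo or by Pixel Clipper, and `antiring`, `neighbours` and `strength` are hypothetical names.

```python
def antiring(value, neighbours, strength=1.0):
    """Pull an overshooting value back towards the local source range.

    value      -- the resampled (possibly ringing) output value
    neighbours -- source pixels that contributed to this output
    strength   -- 0.0 leaves the value untouched, 1.0 clamps it fully
    """
    lo, hi = min(neighbours), max(neighbours)
    clamped = min(max(value, lo), hi)
    return (1.0 - strength) * value + strength * clamped


# An overshoot of 0.1 above the brightest neighbouring source pixel.
overshoot = 1.1
neighbours = [0.0, 1.0]

for strength in (0.0, 0.5, 0.8, 1.0):
    print(strength, round(antiring(overshoot, neighbours, strength), 3))
```

Values that already sit inside the local range pass through unchanged, so only the overshoots are affected.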

I previously had Pixel Clipper in this comparison but I don't think that's necessary anymore. This shader was written to fill the hole of mpv not supporting polar AR at the time, but this is not true anymore. Pixel Clipper remains relatively useful if you want downscaling AR, but for upscaling you're probably better off using the native solution.

Antiring Results

| Filter | MAE | PSNR | SSIM | MS-SSIM | MAE (N) | PSNR (N) | SSIM (N) | MS-SSIM (N) | Mean |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| polar_lanczossharp_ar_80 | 4.51E-03 | 36.1752 | 0.9808 | 0.9975 | 0.9176 | 0.9824 | 0.9401 | 0.3244 | 0.7911 |
| polar_lanczossharp_ar_75 | 4.54E-03 | 36.1713 | 0.9807 | 0.9975 | 0.8791 | 0.9633 | 0.9125 | 0.4005 | 0.7889 |
| polar_lanczossharp_ar_85 | 4.50E-03 | 36.1777 | 0.9809 | 0.9975 | 0.9501 | 0.9945 | 0.9629 | 0.2471 | 0.7886 |
| polar_lanczossharp_ar_70 | 4.56E-03 | 36.1663 | 0.9807 | 0.9976 | 0.8360 | 0.9390 | 0.8813 | 0.4799 | 0.7841 |
| polar_lanczossharp_ar_90 | 4.48E-03 | 36.1787 | 0.9809 | 0.9975 | 0.9752 | 0.9994 | 0.9799 | 0.1694 | 0.7810 |
| polar_lanczossharp_ar_65 | 4.58E-03 | 36.1600 | 0.9806 | 0.9976 | 0.7886 | 0.9087 | 0.8452 | 0.5512 | 0.7734 |
| polar_lanczossharp_ar_95 | 4.48E-03 | 36.1788 | 0.9809 | 0.9975 | 0.9923 | 1.0000 | 0.9924 | 0.0857 | 0.7676 |
| polar_lanczossharp_ar_60 | 4.61E-03 | 36.1528 | 0.9805 | 0.9976 | 0.7389 | 0.8734 | 0.8058 | 0.6213 | 0.7599 |
| polar_lanczossharp_ar_100 | 4.47E-03 | 36.1775 | 0.9809 | 0.9974 | 1.0000 | 0.9934 | 1.0000 | 0.0000 | 0.7483 |
| polar_lanczossharp_ar_55 | 4.64E-03 | 36.1438 | 0.9804 | 0.9976 | 0.6850 | 0.8299 | 0.7597 | 0.6839 | 0.7396 |
| polar_lanczossharp_ar_50 | 4.67E-03 | 36.1339 | 0.9802 | 0.9976 | 0.6292 | 0.7815 | 0.7102 | 0.7393 | 0.7151 |
| polar_lanczossharp | 5.00E-03 | 35.9731 | 0.9785 | 0.9977 | 0.0000 | 0.0000 | 0.0000 | 1.0000 | 0.2500 |

Antiring Commentary

As you can see in the table above, the sweet spot for libplacebo's AR seems to be around 0.8 when taking all the metrics into account. MS-SSIM is the only metric that does not like AR, and this behaviour can be easily explained by the fact that it evaluates the image at various scales to come up with a score. Having some controlled overshoots helps the image reach the intended intensity levels after downscaling here, which is why MS-SSIM disagrees with SSIM (the metric it's based on). MS-SSIM supposedly models a viewer looking at the image from various viewing distances, so you could make the argument that ringing becomes a smaller problem as you move away from the display (and, in this case, you could also say that some ringing is actually good).

If we remove MS-SSIM from the equation the image with full AR becomes the top scorer and the list goes down from there perfectly in line with AR strength. Please remember that these numbers vary with the image, filter and scaling factor.
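The re-ranking without MS-SSIM can be checked directly from the normalised MAE, PSNR and SSIM columns of the table above. The sketch below simply averages those three columns per row and sorts the result; the dictionary layout and the function name are choices made for this example.

```python
# Normalised (MAE, PSNR, SSIM) scores copied from the table above,
# keyed by AR strength in percent (0 means no AR at all).
scores = {
    80: (0.9176, 0.9824, 0.9401),
    75: (0.8791, 0.9633, 0.9125),
    85: (0.9501, 0.9945, 0.9629),
    70: (0.8360, 0.9390, 0.8813),
    90: (0.9752, 0.9994, 0.9799),
    65: (0.7886, 0.9087, 0.8452),
    95: (0.9923, 1.0000, 0.9924),
    60: (0.7389, 0.8734, 0.8058),
    100: (1.0000, 0.9934, 1.0000),
    55: (0.6850, 0.8299, 0.7597),
    50: (0.6292, 0.7815, 0.7102),
    0: (0.0000, 0.0000, 0.0000),
}


def mean_without_ms_ssim(row):
    """Average of the three remaining normalised metrics."""
    return sum(row) / len(row)


ranking = sorted(
    scores, key=lambda s: mean_without_ms_ssim(scores[s]), reverse=True
)
print(ranking)
# [100, 95, 90, 85, 80, 75, 70, 65, 60, 55, 50, 0]
```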

Downsampling Antiring

There's no fundamental difference in the math behind how output pixels are computed as far as filter weights are concerned, so filters with more than a single lobe will also ring when going down. This is often not as jarring and/or noticeable because the filters we use to downscale are often less sharp and therefore their overshoots are less pronounced. mpv defaults to Hermite which doesn't ring at all.

Using polar filters to downscale in mpv is very resource intensive and generally just unnecessary, so I'll switch to Catrom in this section. Downscaling with Catrom produces fairly sharp results, but it's not comically sharp. This filter is perfectly usable even without AR.

Native downscaling AR still seems to be broken at the time of writing, so I'll also only include numbers using Pixel Clipper here.

Downsampling Antiring Methodology

My current method to evaluate downsampling filters is to concede that at 0.5x linear light box is as good as it gets, so I'll be using that as the "reference point" here.

The same kumiko.png test image was used to test downsampling AR, and it was downsampled with mpv as follows:

mpv --no-config --vo=gpu-next --gpu-api=vulkan --no-hidpi-window-scale --pause=yes --screenshot-format=png --deband=no --dither-depth=no --screenshot-high-bit-depth=no --correct-downscaling=no --linear-downscaling=yes --window-scale=0.5 --dscale=box kumiko.png

Some of these flags are redundant because they match the default behaviour, but please notice how you have to disable correct downscaling to get a real box filter (it'll get its radius extended and produce very blurry results otherwise).

The images were basically generated using the following command:

mpv --no-config --vo=gpu-next --gpu-api=vulkan --no-hidpi-window-scale --pause=yes --screenshot-format=png --deband=no --dither-depth=no --screenshot-high-bit-depth=no --correct-downscaling=yes --linear-downscaling=yes --window-scale=0.5 --dscale=catmull_rom --glsl-shader="PixelClipper.glsl" kumiko.png

Downsampling Antiring Results

| Filter | MAE | PSNR | SSIM | MS-SSIM | MAE (N) | PSNR (N) | SSIM (N) | MS-SSIM (N) | Mean |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| catrom_pc_100 | 1.48E-03 | 45.3975 | 0.9971 | 0.9996 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| catrom_pc_95 | 1.51E-03 | 45.3115 | 0.9971 | 0.9996 | 0.9511 | 0.9764 | 0.9804 | 0.9941 | 0.9755 |
| catrom_pc_90 | 1.54E-03 | 45.1996 | 0.9970 | 0.9996 | 0.8988 | 0.9456 | 0.9533 | 0.9742 | 0.9430 |
| catrom_pc_85 | 1.57E-03 | 45.0609 | 0.9970 | 0.9996 | 0.8456 | 0.9075 | 0.9201 | 0.9436 | 0.9042 |
| catrom_pc_80 | 1.60E-03 | 44.8991 | 0.9969 | 0.9996 | 0.7918 | 0.8630 | 0.8815 | 0.9031 | 0.8598 |
| catrom_pc_75 | 1.63E-03 | 44.7168 | 0.9968 | 0.9996 | 0.7394 | 0.8129 | 0.8405 | 0.8655 | 0.8146 |
| catrom_pc_70 | 1.66E-03 | 44.5193 | 0.9967 | 0.9995 | 0.6873 | 0.7586 | 0.7988 | 0.8358 | 0.7701 |
| catrom_pc_65 | 1.69E-03 | 44.3107 | 0.9966 | 0.9995 | 0.6334 | 0.7013 | 0.7484 | 0.7838 | 0.7167 |
| catrom_pc_60 | 1.72E-03 | 44.0984 | 0.9966 | 0.9995 | 0.5818 | 0.6429 | 0.7004 | 0.7456 | 0.6677 |
| catrom_pc_55 | 1.75E-03 | 43.8862 | 0.9965 | 0.9995 | 0.5311 | 0.5846 | 0.6505 | 0.7033 | 0.6174 |
| catrom_pc_50 | 1.78E-03 | 43.6738 | 0.9964 | 0.9995 | 0.4796 | 0.5262 | 0.5935 | 0.6386 | 0.5595 |
| catrom | 2.05E-03 | 41.7596 | 0.9953 | 0.9994 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

Downsampling Antiring Commentary

The first thing we can see is that AR seems to be a net-positive in general even taking MS-SSIM into account this time. The scores go down almost linearly and the difference is pretty sizeable.

As far as image quality goes there's probably no reason to be against using Pixel Clipper if you're downscaling with sharp filters. Your only concern should be performance, and since PC needs its own shader pass the performance impact might not be negligible.

Outro

I want to make it clear though that you shouldn't take the results as gospel. Mathematical image quality metrics do not always correlate perfectly with how humans perceive image quality, and your personal preference is entirely subjective. You should take this page for what it is, research that produces numbers, but you should not take these numbers at face value before understanding what they actually mean.